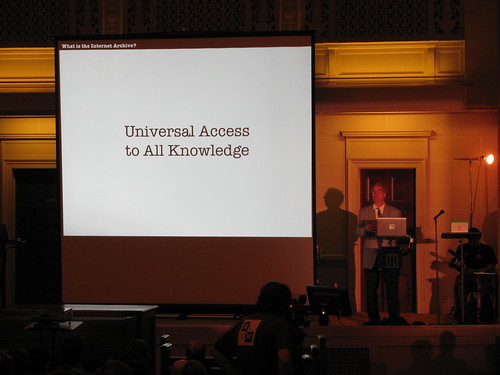

After posting DRM and the Churches of Universal Access to All Knowledge’s strategic plans I noticed some other mentions of DRM and BookServer/Internet Archive/Open Library. I’m dropping them here with a little bit of added commentary.

First there’s my microcarping at the launch event (2009-10-29, over 2.5 years ago). Fran Toolan blogged about the event and had a very different reaction:

The last demonstration was not a new one to me, but Raj came back on and he and Brewster demonstrated how using the Adobe ACS4 server technology, digital books can be borrowed, and protected from being over borrowed from libraries everywhere. First Brewster demonstrated the borrowing process, and then Raj tried to borrow the same book but found he couldn’t because it was already checked out. In a tip of the hat to Sony, Brewster then downloaded his borrowed text to his Sony Reader. This model protects the practice of libraries buying copies of books from publishers, and only loaning out what they have to loan. (Contrary to many publishers fears that it’s too easy to “loan” unlimited copies of e-Books from libraries).

As you’ll see (and saw in the screenshot last post) a common approach is to state that some Adobe “technology” or “software” is involved, but not say DRM.

A CNET story covering the announcement doesn’t even hint at DRM, but it does have a quote from Internet Archive founder Brewster Kahle that gives some insight into why they’re taking the approach they have (in line with what I said in the previous post; see the accompanying picture there):

“We’ve now gotten universal access to free (content),” Kahle added. “Now it’s time to get universal access to all knowledge, and not all of this will be free.”

A report from David Rothman missed the DRM entirely, but understood that it lurks at least as an issue:

There’s also the pesky DRM question. Will the master searcher provide detailed rights information, and what if publishers insist on DRM, which is anathema to Brewster? How to handle server-dependent DRM, or will such file be hosted on publisher sites?

Apparently it isn’t, and Adobe technology to the rescue!

Nancy Herther noted DRM:

Kahle and his associates are approaching this from the perspective of creating standards and processes acceptable to all stakeholders-and that includes fair attention to digital rights management issues (DRM). […] IA’s focus is more on developing a neutral platform acceptable to all key parties and less on mapping out the digitization of the world’s books and hoping the DRM issues resolve themselves.

The first chagrined mention of DRM that I could find came over 8 months later from Petter Næss:

Quotable: “I figure libraries are one of the major pillars of civilization, and in almost every case what librarians want is what they should get” (Stewart Brand)

Bit strange to hear Brand waxing so charitable about about a system that uses DRM, given his EFF credentials, but so it goes.

2011-01-09 maiki wrote that a book page on the Open Library site claimed that “Adobe ePUB Book Rights” do not permit “reading aloud” (conjure a DRM helmet with full mask to make that literally true). I can’t replicate that screen (capture at the link). Did Open Library provide more up-front information then than it does now?

2011-03-18 waltguy posted the most critical piece I’ve seen, but closes granting the possibility of good strategy:

It looks very much like the very controlled lending model imposed by publishers on libraries. Not only does the DRM software guard against unauthorized duplication. But the one user at a time restriction means that libraries have to spend more money for additional licences to serve multiple patrons simultaneously. Just like they would have to buy more print copies if they wanted to do that.

[…]

But then why would the Open Library want to adopt such a backward-looking model for their foray into facilitating library lending of ebooks ? They do mention some advantages of scale that may benefit the nostly public libraries that have joined.

[…]

However, even give the restrictions, it may be a very smart attempt to create an open-source motivated presence in the commercial-publisher-dominated field of copyrighted ebooks distribution. Better to be part of the game to be able to influence it’s future direction, even if you look stodgy.

2011-04-15 Nate Hoffelder noted, concerning a recent addition to Open Library:

eBooks can be checked out from The Open Library for a period of 2 weeks. Unfortunately, this means that Smashwords eBooks now have DRM. It’s built into the system that the Open Library licensed from Overdrive, the digital library service.

In a comment, George Oates from Open Library clarified:

Hello. We thought it might be worth correcting this statement. We haven’t licensed anything from Overdrive. When you borrow a book from the Open Library lending library, there are 3 ways you can consume the book:

1) Using our BookReader software, right in the browser, nothing to download,

2) As a PDF, which does require installing the Adobe Digital Editions (ADE) software, to manage the loan (and yes, DRM), or

3) As an ePub, which also requires consumption of the book within ADE.

Just wanted to clarify that there is no licensing relationship with Overdrive, though Overdrive also manages loans using ADE. (And, if we don’t have the book available to borrow through Open Library, we link through to the Overdrive system where we know an Overdrive identifier, and so can construct a link into overdrive.com.)

This is the first use of the term “DRM” by an Internet Archive/Open Library person in connection with the service that I’ve seen (though I’d be very surprised if it was actually the first).

2011-05-04 and again 2012-02-05 Sarah Houghton mentions Open Library very favorably in posts lambasting DRM. I agree that DRM is negative and Open Library positive, but find it just a bit odd in such a post to promote a “better model” that…also uses DRM. (Granted, not every post needs to state all relevant caveats.)

2011-06-25 the Internet Archive made an announcement about expanding OpenLibrary book lending:

Any OpenLibrary.org account holder can borrow up to 5 eBooks at a time, for up to 2 weeks. Books can only be borrowed by one person at a time. People can choose to borrow either an in-browser version (viewed using the Internet Archive’s BookReader web application), or a PDF or ePub version, managed by the free Adobe Digital Editions software. This new technology follows the lead of the Google eBookstore, which sells books from many publishers to be read using Google’s books-in-browsers technology. Readers can use laptops, library computers and tablet devices, including the iPad.

blogged about the announcement, using the three characters:

The open Library functions in much the same way as OverDrive. Library patrons can check out up to 5 titles at a time for a period of 2 weeks. The ebooks can be read online or on any Device or app that supports Adobe DE DRM.

2011-07-05 a public library in Kentucky posted:

The Open Library is a digital library with an enormous ammount of DRM free digital books. The books are multiple formats, ranging from PDF to plain text for the Dial-up users out there. We hope you check them out!

That’s all true, Open Library does have an enormous amount of DRM-free digital books. And a number of restricted ones.

2011-08-13 Vic Richardson posted what is, as far as I can tell, an accurate description for general readers.

Yesterday (2012-05-08) Peter Brantley of the Internet Archive answered a question about how library ebook purchases differ from individual purchases. I’ll just quote the whole thing:

Karen, this is a good question. Because ebooks are digital files, they need to be hosted somewhere in order to be made available to individuals. When you buy from Amazon, they are hosting the file for the publisher, and permit its download when you purchase it. For a library to support borrowing, it has to have the ebook file hosted on its behalf, as most libraries lack deep technical expertise; traditionally this is done by a service provider such as Overdrive. What the Internet Archive, Califa (California public library consortium), and Douglas County, Colorado are trying to do is host those files directly for their patrons. To do that, we need to get the files direct from the publisher or their intermediary distributor — in essence, we are playing the role of Amazon or Barnes & Noble, except that as a library we want people to be able to borrow for free. This sounds complicated, and it is, but then we have to introduce DRM, which is a technical protection measure that a library ebook provider has to implement in order to assure publishers that they are not risking an unacceptable loss of sales. DRM complicates the user experience considerably.

My closing comment-or-so: Keep in mind that it is difficult for libraries to purchase restricted copies when digesting good news about a publisher planning to drop DRM. The death of DRM would be good news indeed, but inevitable (for books)? I doubt it. My sense is that each step forward against DRM has been matched by two (often silent) steps back.